Projects

Reidentifying Medicare

With colleagues Teague and Culnane, I helped uncover one of the largest privacy breaches in Australian history 2016–17. Federal health and human services in mid-2016 released an open dataset of 30 years of Medicare and Pharmaceutical Benefits Schemes transaction records, for 10% of the Australian population. The intention was to drive health economics research, for evidence-based policy development. Unfortunately minimal privacy protections were in place, while the data reported sensitive treatments e.g., for AIDS, late-term abortions, etc. Initially we completely reidentified doctors, due to improper hashing of their IDs. As a result the dataset was taken offline and a public statement released by the Department. It could not be recalled. A year later we announced we had reidentified patients such as well-known figures in Australian sport and politics.

The day after Medicare’s retraction, the Attorney General published a plan to legislate against reidentification of Commonwealth datasets. In the months to come the Reidentification Criminal Offence Bill (an amendment to the Privacy Act 1988) was introduced to Parliament criminalising the act of reidentification, unless with prior permission. The bill, if passed, would be retroactively applied and reverse the burden of proof on accused. While stifling security experts and journalists responsibly disclosing existing privacy breaches to the government, the bill would not prevent private corporations or foreign entities outside Australian jurisdiction from misusing Commonwealth data. Of 15 submissions to the ensuing Parliamentary Inquiry examining the appropriateness of the bill, 14 were against including the Law Council of Australia, Australian Bankers’ Association, and EFF. Our submission to the inquiry achieved significant impact, being directly quoted 9 times in the Senate Committee’s final report. We wrote an Op-Ed in the Sydney Morning Herald clearly explaining why criminalising reidentification would do more harm than good.

Reidentifying Myki

Colleagues Culnane, Teague and I demonstrated a significant

reidentification of public transport users, in a 2018 datathon dataset

of fine-grained touch-on touch-off events on Victorian public transport

(the Myki card). We were able to find ourselves, triangulate a colleague

based on a single cotravelling event, and a State MP through linking

with social media postings. The privacy breach was significant:

it would be easy for stalkers to learn when children travel alone, where

survivors of domestic abuse now live, etc. from the release. The

Victorian privacy commissioner OVIC’s

investigation found PTV had broken state laws.

Colleagues Culnane, Teague and I demonstrated a significant

reidentification of public transport users, in a 2018 datathon dataset

of fine-grained touch-on touch-off events on Victorian public transport

(the Myki card). We were able to find ourselves, triangulate a colleague

based on a single cotravelling event, and a State MP through linking

with social media postings. The privacy breach was significant:

it would be easy for stalkers to learn when children travel alone, where

survivors of domestic abuse now live, etc. from the release. The

Victorian privacy commissioner OVIC’s

investigation found PTV had broken state laws.

Privacy assessments

With colleagues Ohrimenko, Culnane, Teague, I have contributed towards several technical privacy assessments of government data initiatives and privacy sector projects. Contracted by the Australian Bureau of Statistics (ABS), we have for example analysed the privacy of several options for name encoding for private record linkage—as might be used for Australian Census data for example. For Transport for NSW, we have performed a technical privacy assessment of a Data61-processed dataset of Opal transport card bus, train, ferry touch ons/offs again under contract. The data has subsequently been published. We have also discovered vulnerabilities in the hashing methodology published by the UK Office of National Statistics in a third privacy assessment (explained here). These are non-exhaustive examples of technical assessments performed by the group. Common themes to this work are reflected in our 2018 report for the Office of the Victorian Information Commissioner. These and broader issues around robustness of AI are summarised in an OVIC book chapter.

COVIDSafe app privacy

In 2020, the COVID-19 pandemic swept across the world with devistating

consequences. An important strategy for slowing the spread of the

coronavirus is contact tracing, which traditionally had been manual,

laborious but accurate. Naturally many governments hoped that high

uptake of Bluetooth-enabled smart phones could be leveraged for

automated contact tracing, supporting over-burdened human contact traces

if and when COVID-19 put strain on health systems. In March Australia

opted to adopt and adapt Singapore’s Bluetooth contact tracing system

TraceTogether in its app called COVIDSafe. While the contact tracing effectiveness

of

the

system

has since come in to focus, many tech commentators and researchers

identified seemingly unnecessary compromise in the system’s privacy

provisions. I reported

of these privacy critiques with colleagues Farokhi (Melbourne),

Asghar and Kaafar (Macquarie), a report that was cited by the

government’s COVIDSafe

PIA. Like many other experts, we aimed to highlight the flexibility

and greater privacy of decentralised approaches. With colleagues Leins

and Culnane (Melbourne), we wrote

in the MJA on a broader range of issues on the techno-legal and

ethical dilemma’s for automated contact tracing. For more up to date

detail on the COVIDSafe implementation interested readers should check

out the thorough posts by Vanessa et

al..

In 2020, the COVID-19 pandemic swept across the world with devistating

consequences. An important strategy for slowing the spread of the

coronavirus is contact tracing, which traditionally had been manual,

laborious but accurate. Naturally many governments hoped that high

uptake of Bluetooth-enabled smart phones could be leveraged for

automated contact tracing, supporting over-burdened human contact traces

if and when COVID-19 put strain on health systems. In March Australia

opted to adopt and adapt Singapore’s Bluetooth contact tracing system

TraceTogether in its app called COVIDSafe. While the contact tracing effectiveness

of

the

system

has since come in to focus, many tech commentators and researchers

identified seemingly unnecessary compromise in the system’s privacy

provisions. I reported

of these privacy critiques with colleagues Farokhi (Melbourne),

Asghar and Kaafar (Macquarie), a report that was cited by the

government’s COVIDSafe

PIA. Like many other experts, we aimed to highlight the flexibility

and greater privacy of decentralised approaches. With colleagues Leins

and Culnane (Melbourne), we wrote

in the MJA on a broader range of issues on the techno-legal and

ethical dilemma’s for automated contact tracing. For more up to date

detail on the COVIDSafe implementation interested readers should check

out the thorough posts by Vanessa et

al..

ABS & US Census linking

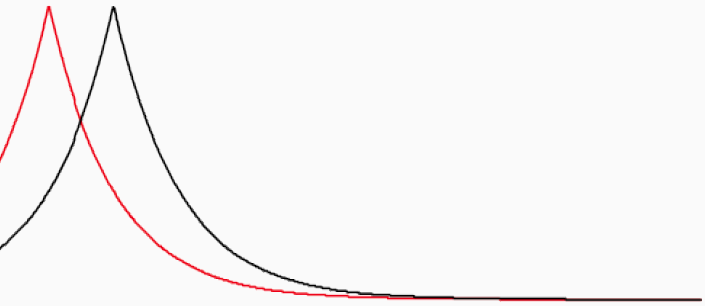

Data linkage has many public interest uses which demand accuracy but

also proper representation of uncertianty. The framework of Bayesian

probability is ideal for incorporating uncertainty into data linking,

however techniques in the framework are often different to scale to

large or even moderate sized dataset. With the Australian Bureau of

Statistics and U.S. Census Bureau, student Marchant (Melbourne),

colleagues Steorts (Duke), Kaplan (Colorado State) and I adapted the

blink Bayesian model of Steorts for data linking, to be

amenable to parallel inference. Our resulting unsupervised end-to-end

Bayesian record linkage system d-blink, open-sourced running on

Apache Spark, enjoys efficiency gains of up to 300x. Maintaining

uncertainty throughout the linkage process enables uncertainty

propagation to downstream data analysis tasks potentially leading to

accuracy gains. Tests on ABS and US Census (State of Wyoming: 2010

Decennial Census with administrative records from the Social Security

Administration’s Numident) data are reported in the JCGS

paper.

Microsoft data linking

Over 2010–13, I was one of two researchers and a small handful of developers, building a production system for data integration—an application of machine learning in databases that leveraged our research at Microsoft e.g., [VLDB’12]. The system shipped multiple times internally (resulting in 4x ShipIt! awards for sustained product transfer). Notable applications were to the Bing Search engine across multiple verticals, and the Xbox game console. After the 2011/12 refresh, in which our data integration was a key contribution from Research, Xbox revenue increased by several $100m (due to increased sales of consoles and Xbox Live subscriptions). Within Microsoft Research, this impact was attributed to our small team. In Bing’s social vertical, our system matched over 1b records daily. I continue to work on data integration at Melbourne.

Transplant failures

Through 2016 my group with colleague Bailey collaborated with the Austin Hospital’s transplantation unit, on predicting outcomes (graft failure) of liver transplantation for Australian demographics. With machine learning-based approaches, PhD student Yamuna Kankanige could improve by over 20% the predictive accuracy of the Donor Risk Index [Transplantation’17]—a risk score widely used by Australian surgeons today, in planning transplants and follow-up interventions.

Cheating at Kaggle

In 2011 with Narayanan (now Princeton) and Shi (now Cornell), I helped demonstrate the power of privacy attacks to Kaggle (a $16m Series A, Google acquired platform for crowdsourcing machine learning) [IJCNN’11]. After determining the source of an anonymised social network dataset, intended for use in a link prediction contest, we downloaded and linked it to the competition test set. Normally a linkage attack would end there, having re-identified users. We used it to look up correct test answers and win the competition by ‘cheating’. No privacy breach resulted and contestants remained able to compete. However the result raised awareness for Kaggle, to the stark reality of privacy attacks. Team member Narayanan subsequently consulted on the privacy of the $3m Heritage Health Prize dataset.

Firefox side-channel

With a Berkeley group led by Dawn Song [report], I helped improve the security of Mozilla’s open-source development processes. While open-source projects tend to improve system security through the principle of ‘many eyes’, Mozilla was publishing security-related commits to the public Firefox web browser source repository, often a month before those commits would be automatically pushed to users. We trained a learning-based ranker to predict which commits were more likely security-related. An attacker could then easily sift through a few commits by hand to find zero-day exploits, on average a month prior to patching. As a result of our work Mozilla made security-related commits private until they were published as patches.

Media Coverage

- 2020, Australia’s COVIDSafe app: ZDNet, The Guardian, The Australian, SBS News, Gizmodo, ACS InformationAge

- 2019 (1m+ views), Reidentification of the Myki transport data release: named a top-2 story of 2019 by ComputerWeekly, Australian Financial Review, 3AW radio, ABC Online, Guardian Australia, 9News.com.au, Canberra Times, Australian Financial Review, ZDNet, Herald Sun, The Age, The Australian, The Mandarin, and many more

- 2018–19, OVIC commissioned writing: book on AI ethics The Mandarin; and report on de-identification The Mandarin

- 2017, Liver transplantation outcome prediction: 9news, heraldsun

- 2017–18 (1m+ views), Further reidentification of the Australian Medicare/Pharmaceutical (MBS/PBS) 10% release: ABC, Sydney Morning Herald, IT News, ZdNet, The Register, SBS News, Business Insider, News.com.au, Daily Telegraph, Brisbane Times, Computer World, LifeHacker, BoingBoing, Northern Star, BuzzFeed, itnews

- 2016, Reidentification of the Australian Medicare/Pharmaceutical (MBS/PBS) 10% release: zdnet (again), The Register, itnews (again), ABS news, The Guardian, The Age, CSO, HuffPo, Canberra Times, Crickey, ComputerWorld, Gizmodo, Digital Rights Watch, The Saturday Paper

Note: view estimates are wildly approximate, sometimes “cumulative” overestimates.

Policy Submissions

I have co-authored submissions to 12 policy and legislation consultations and inquiries run by government departments and agencies. These responses highlight challenges and best-practice solutions in data privacy and AI.

- Chris Culnane, Ben Rubinstein. Submission to the Review of the Privacy Act 1988 submitted to the Attorney-General’s Department, 29/11/2020.

- Ben Rubinstein. Response to the Data Strategy Consultation Paper of the COAG Energy Council’s Energy Security Board submitted to the Energy Security Board, COAG Energy Council, 27/11/2020.

- Chris Culnane, Ben Rubinstein. Consultation Response ‘Data Availability and Transparency Bill’ submitted to the Office of the National Data Commissioner, 6/11/2020.

- Ben Rubinstein. Data stakeholder consultation (oral) in response to Use of Administrative Data in the 2021 Census of Population and Housing, Australian Bureau of Statistics, 17/02/2020.

- Claire Benn, Robert C. Williamson, Ben Rubinstein, Chris Culnane. Privacy, Consent and Trust: Response to the Data Sharing and Release Legislative Reform Discussion Paper submitted to the Office of the National Data Commissioner, 15/10/2019.

- Chris Culnane, Benjamin Rubinstein, Vanessa Teague. Data Sharing and Release submitted to the Office of the National Data Commissioner, 15/10/2019.

- University of Melbourne. Artificial Intelligence: Australia’s Ethics Framework - University of Melbourne Response submitted to the Department of Industry, Innovation and Science, 6/06/2019.

- Chris Culnane, Benjamin Rubinstein, Cynthia Sear, Vanessa Teague. Submission to the ACCC Consultation on the Exposure Draft of CDR draft rules (banking) submitted to the ACCC, 17/05/2019.

- Chris Culnane, Benjamin Rubinstein, Vanessa Teague. Submission to the Senate Inquiry into the My Health Record System submitted to the Senate Community Affairs References Committee, 14/09/2018.

- Chris Culnane, Benjamin Rubinstein, Vanessa Teague. Data Sharing and Release Legislation Issues Paper Consultation Response submitted to the Department of the Prime Minister and Cabinet, 4/07/2018.

- Chris Culnane, Benjamin Rubinstein, Vanessa Teague. Response to the Productivity Commission’s draft report on data availability and use submitted to the Productivity Commission, 16/12/2016.

- Chris Culnane, Benjamin Rubinstein, Vanessa Teague. Comments on the proposed amendments to the Privacy Act to criminalise reidentification submitted to the Senate Legal and Constitutional Affairs Committee, 27/11/2016.

As member of the Australian Academy of Science’s NCICS I contributed to the Digital Futures strategic plan.

Funding & Awards

Funding

Since arriving at the University of Melbourne Oct 2013, I have been awarded competitive funding (Cat 1–4) of $11.75m total, $7.45m as lead-CI, $3.46m on a per-CI basis. Funding includes:

- 2025–2026 $150k: Google Research Funding Agreement, From Data to Rapid Insights with Intelligent AI-Powered Databases, Renata Borovica-Gajic, Benjamin Rubinstein.

- 2025–2028 $659k: Office of National Intelligence National Intelligence Discovery Grants 2025, Olga Ohrimenko, Renata Borovica-Gajic, Benjamin Rubinstein.

- 2025 $267k: Google Research Funding Agreement, Improving the Quality of Generated Fuzz Drivers, Van-Thuan Pham, Toby Murray, Benjamin Rubinstein.

- 2024–2026 $1.25m: DSTG/ASCA Research Agreement Variation, Adversarial Reinforcement Learning: Attacks and Defences, Benjamin Rubinstein et al.

- 2022–2023 $215k: Google Research Funding Agreement, Selective Dataflow-Guided Fuzzing, Van-Thuan Pham, Toby Murray, Benjamin Rubinstein.

- 2022–2025 $589k: Office of National Intelligence National Intelligence and Security Discovery Research Grants 2022, James Bailey, Michele Trenti, Richard Sinnott, Benjamin Rubinstein, Krista Ehinger.

- 2022–2024 $405k: Australian Research Council Discovery Project, Benjamin Rubinstein.

- 2022–2023 $304k: Defence Science & Technology Group Research Agreement, Adversarial Reinforcement Learning: Attacks and Defences, Benjamin Rubinstein, Andrew Cullen, Chris Leckie, Tansu Alpcan, Sarah Erfani.

- 2022–2023 $167k: Data61/CSIRO Collaboration Research Project, Adversarial Machine Learning for Cyber (extension), Benjamin Rubinstein, Andrew Cullen, Chris Leckie, Tansu Alpcan, Sarah Erfani.

- 2021–2024 $3m: Department of Industry, Science, Energy and Resources AUSMURI, Cybersecurity Assurance for Teams of Computers and Humans (CATCH), Ben Rubinstein et al. Joint with AUD$5m ARO MURI co-led by Somesh Jha see catch-muri.org for full team

- 2020–2021 $34k: National Australia Bank Consulting Services Agreement, Data privacy assessment, Ben Rubinstein, Olga Ohrimenko.

- 2020–2021 $30k: Australian Bureau of Statistics Research Contract, Differential privay in a dynamic query environment, Ben Rubinstein, Olga Ohrimenko.

- 2020–2021 $43k: Facebook 2020 Probability and Programming Research Award, Formalizing and verifying fair data use in collaborative machine learning, Olga Ohrimenko, Ben Rubinstein, Toby Murray.

- 2020–2021 $358k: Facebook Sponsored Research Agreement, Adversarial attacks on machine translation, and their defenses, Trevor Cohn, Benjamin Rubinstein.

- 2020 $237k: Consunet and Defence Science & Technology Group Defence CRC, Distributed Autonomous Spectrum Management (DUST) II, Benjamin Rubinstein, Tansu Alpcan.

- 2019–2020 $237k: Consunet and Defence Science & Technology Group Defence CRC, Distributed Autonomous Spectrum Management (DUST) I, Tansu Alpcan, Benjamin Rubinstein.

- 2019–2021 $62k: Defence Science & Technology Group and Data61/CSIRO Next Gen Tech Fund CRP, Towards Robust Learning Systems via Amortized Optimization and Domain Adaptation, Dinh Phung et al.

- 2018–2019 $50k: Australian Bureau of Statistics Research Contract, Disclosure Risk Analysis, Chris Culnane, Benjamin Rubinstein.

- 2018–2021 $490k: U.S. Army Research Office Research Grant, Towards designing complex networks resilient to stealthy attack and cascading failure, Antoinette Tordesillas, Benjamin Rubinstein, James Bailey, Howard Bondell.

- 2018–2019 $24k: Mondo Power AMSI internship program, Anomaly detection in time series energy consumption data, Benjamin Rubinstein, Leyla Roohi.

- 2018 $31k: Australia Bureau of Statistics Research Contract, Scaling up Bayesian record linkage, Benjamin Rubinstein, Neil Marchant.

- 2018–2019 $77k: Oceania Cyber Security Centre Seed Grant, Detection of Infected Internet-of-Thing Devices to Prevent Distributed Denial of Service Attacks, Sarah Erfani et al.

- 2017–2022 $1.014m: Defence Science & Technology Group and Data61/CSIRO Next Gen Tech Fund CRP, Adversarial Machine Learning for Cyber, Benjamin Rubinstein, Chris Leckie, Tansu Alpcan, Sarah Erfani, Jun Zhang.

- 2017–2021 $970k: Department of Education and Training Academic Centre for Cyber Security Excellence (ACCSE), Chris Leckie et al.

- 2017–2018 $93k: Defence Science & Technology Group Research Contract, Tactical Security and Health in Multi-Modal Sensor Control and Management, Iman Shames, Benjamin Rubinstein, Farhad Farokhii.

- 2017–2018 $24k: Australia Bureau of Statistics AMSI internship program, Evaluating feasibility of Bayesian entity resolution, Benjamin Rubinstein, Neil Marchant.

- 2017 $168k: Australian Bureau of Statistics Research Contract, Design of securely encrypted (anonymised) linkage keys, Benjamin Rubinstein, Chris Culnane, Vanessa Teague.

- 2017 $30k: Transport for NSW Research Contract, Analysis of privacy protections in Transport for NSW Opal data, Benjamin Rubinstein, Chris Culnane, Vanessa Teague.

- 2017 $35k: Office of the Commissioner for Privacy and Data Protection Research Contract, Implications of de-identification of personal information and impact of de-identification on the Privacy & Data Protection Act (Vic) 2014, Vanessa Teague, Chris Culnane, Benjamin Rubinstein.

- 2016–2018 $370k: Australian Research Council Discovery Early Career Researcher Award (DECRA), Secure and Private Machine Learning, Benjamin Rubinstein.

- 2016–2018 $85k: University of Melbourne DECRA Establishment Grant, Secure and Private Machine Learning, Benjamin Rubinstein.

- 2015 $48k: Melbourne Networked Society Institute Seed Grant, Active Defence, Benjamin Rubinstein et al. Pursuit article

- 2015–2016 $128k: FLI Project Grant, Security Evaluation of Machine Learning Systems, Benjamin Rubinstein. Funds backed by Elon Musk, media: vice news.com.au pursuit

- 2015 $20k: Microsoft Research Azure Machine Learning Award, Big data preparation, Benjamin Rubinstein. In kind support

- 2015–2017 $216k: Australian Research Council Discovery Project, Benjamin Rubinstein. First early-career sole-CI in FOR08, nationally, for 3 years

- 2014 $39k: University of Melbourne ECR Grant, Adversarial Machine Learning, Benjamin Rubinstein.

- 2014 $5k: Amazon AWS Machine Learning Grant, Adversarial Machine Learning, Benjamin Rubinstein.

Awards & Honours

- Bug Bounty Honorable Mention (2021), Google, Attacks on Google differential privacy library. Corresponding Facebook/Meta Opacus patch

- Excellence in Research Award (2021), School of Computing and Information Systems, Univeristy of Melbourne

- Best Reviewer Award (2018,2019), Conference on Neural Information Processing Systems (NeurIPS formerly NIPS)

- WiE Best Postgrad Paper Prize (2017), IEEE Australia Council for PhD student Maryam Fanaeepour’s joint work

- Victorian Young Tall Poppy Science Award (2016), Australian Institute of Policy & Science

- Microsoft Azure ML Award (2015), Microsoft Research

- Excellence in Research Award (2014), Dept. of Computing and Information Systems, University of Melbourne

- Gold Star Award (2011), Microsoft Research, top employee accolade

- ShipIt! Awards (2010–12, four times), Microsoft, each for sustained product transfer

- Yahoo! Key Scientific Challenge Prize (2009), Adversarial Machine Learning

- Siebel Scholars Fellowship (2009), Siebel Foundation, final year graduate fellowship

- Best Poster Award (2008), 11th Int. Symp. Recent Advances in Intrusion Detection (RAID’08)

- UC Regents University Fellowship (2004–05), UC Berkeley, first year graduate fellowship

- IEEE Computer Society Larson Best Paper Prize (2002), ugrad papers worldwide for

Service

Advisory

- Member (2023–), Kingston AI Group. link

- Member (2015–25), National Committee for Information and Communication Sciences, the Australian Academy of Science. link

- Member Judging Panel (2023), Falling Walls Lab Sydney

- Member (2021–23), Go8 Computing, Formerly Australian Computing Research Alliance (ACRA). See e.g., the statement on venue rankings in computer science research.

- Member (2019–21), Methodology Advisory Committee, Australian Bureau of Statistics.

- Member (2020–21), Ranking Advisory Committee, Computing Research and Education Association of Australasia (CORE).

Institutional

- Deputy Dean Research (interim 2024; ongoing 2025–), Faculty of Engineering and Information Technology

- Associate Dean Research (2020–23), Faculty of Engineering and Information Technology

- Co-lead (2021–), AI Group, School of Computing and Information Systems

- Member (2019–), School Executive, School of Computing and Information Systems

- Investigator (2000–), Melbourne Centre for Data Science (MCDS)

- Community Researcher (2000–), Centre for AI and Digital Ethics (CAIDE), Melbourne Law School

Many more committees and working groups at departmental, faculty and university levels.

Program committees

- ICML’2011,’12,’17-18 (PC),’19-21,25 (area chair), NeurIPS (formerly NIPS)’2014,’17-19 (PC),’20,’23-25 (area chair), AAAI’2018 (senior PC), ICDE’2016,’17, AISTATS’2017, CSCML’2017, KDD’2015,’16, CSF’2014 (co-chair AI & Security Track), SIGMOD’2013, IJCAI’2013

- Workshop PCs: 2019 USENIX Security and AI Networking Conference (ScAINet’19) (at USENIX Security), Privacy Preserving Machine Learning (PPML) 2018 (at NeurIPS), ’19 (at CCS), Deep Learning for Security 2018 (at IEEE S&P), AI for Cyber Security (AICS at AAAI)’2017,’18, AI & Security (AISec at CCS)’2009,’10,’13,’15,’16,’17,’18,’19, S+SSPR’2016 (at ICPR), PSDML’2010 (at ECML/PKDD)

- Organiser: ACM AI & Security workshops (AISEC)’2011,’12,’14, Learning, Security & Privacy workshop (at ICML’2014)

- Chair, Demonstration and Workshop Local Arrangements SIGMOD’2015

Speaking engagements

- 12/2021: Invited panelist at the ABS Symposium on Data Access and Privacy, Virtual.

- 06/2019: Invited speaker at the Challenges and New Approaches for Protecting Privacy in Federal Statistical Programs Workshop organised by the Committee on National Statistics (CNSTAT) at the U.S. National Acadmies of Sciences, Engineering, and Medicine, Washington D.C. Talk videos

- 04/2019: Invited speaker at the Privacy and the Science of Data Analysis workshop at the Simons Institute for the Theory of Computing, Berkeley. Talk video.

- 08/2018: Keynote at the GIScience’18 Location Privacy and Security Workshop (LoPaS)

- 06/2018: Panelist, Panel on Data Privacy, Oxford-Melbourne Digital Marketing and Analytics Executive Program.

- 02/2018: Invited speaker at Data61/DSTG Cyber Summer School

- 02/2018: Session chairing Security, Privacy & Trust at AAAI2018

- 10/2017: Invited speaker at the DARPA Safe ML workshop at the Simons Institute for the Theory of Computing, Berkeley.

- 04/2017: Invited speaker at the Melbourne CTO Club (comprising over a dozen CTOs of mid-sized tech firms).

- 03/2017: Speaker at the AMIRA Exploration Managers Conference, RACV Club Healesville.

- 01/2017: Speaking at Telstra (data science)

- 11/2016: Speaker/panelist at the public lecture Human and Machine Judgement and Interaction Symposium, Uni Melbourne

- 05/2016: Invited speaker at the National Fintech Cyber Security Summit at the Ivy, Sydney hosted by Data61, Stone & Chalk, the Chief Scientist of Australia.

- 04/2016: Speaking at Telstra (data science)

- 02/2016: Speaking at Samsung Research America and UC Berkeley.

- 02/2016: Speaking in two exciting panels at AAAI’2016 on keeping AI beneficial and challenges for AI in cyber operations.

- 12/2015: Plenary at the 12th Engineering Mathematics and Applications Conference (EMAC’2015) the biennial meeting of the EMG special interest group of ANZIAM

- 07/2015: Keynote at the Australian Academy of Science Elizabeth and Frederick White Research Conference on Mining Data for Detection and Prediction of Failure in Geomaterial

- 11/2014: Facebook (Menlo Park) talk Data Integration through the Lens of Statistical Learning